Finding boulders in satellite imagery using machine learning (a.k.a. FART)

|

|

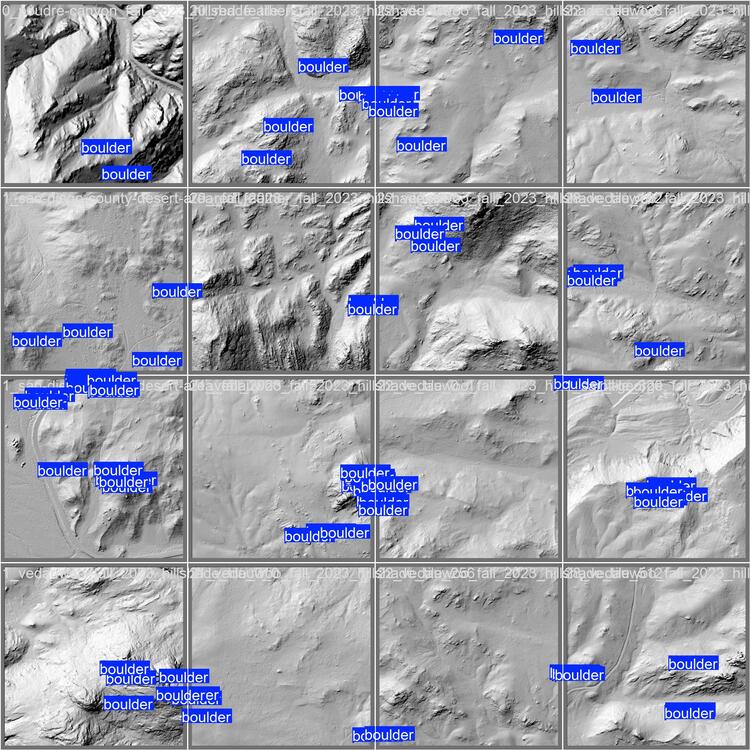

This is a project I spent about 500-600 hours on. It is focused on bouldering, but the concept can be applied generally. It is an object detection ML model built in Python. I have seen some 'Data Science' projects posted to Mountain Project and a lot of them seem pretty surface-level. Once I got this project to a more complete state, I wanted to share it to hopefully encourage other people to develop some more interesting analyses.

Finding Awesome boulders RemoTely (FART)

I have spent hundreds of hours looking for new boulders or cliffs to develop, both hiking around and at home. Since moving out West, I realized satellite imagery is pretty reliable to use as a guide given (a) many areas don't have issues with forest coverage, at least compared to the East Coast, and (b) satellite imagery resolution has increased substantially in the past decade.

The tool I built (FART) replicates what I do manually: find an established area in satellite imagery and visually compare it to a new area. If I know a huge boulder in area A, and it looks similar to a potential boulder in area B, I can assign a higher probability to the boulder in area B being climbable. This makes some implicit assumptions; for example, the height of a boulder is essentially ignored unless there are shadows in the image or something.

If the above is confusing: give FART a coordinate and it will return an image with boxes drawn wherever FART thinks there are boulders.

The GitHub page is https://github.com/jlombard314159/boulderFinder. Note that the true name is FART, but since the repo is public I don't name it FART on the GitHub page. FART is the name though. For more info on the training data generation and modeling process, see: https://github.com/jlombard314159/boulderFinder#readme

Scraping MP data

The GitHub repo includes code to scrape Mountain Project.
Please do not scrape MP data using this code; I can provide you the JSON data. There are substantially more data available now than in the OpenBeta dataset. I trained models using OpenBeta, or whatever that site is that had catalogued all MP data circa 2018 in JSON form, and was able to build a substantially better model using the current MP data. That being said, I believe my use of the MP data contributes to the climbing community and doesn't violate any sort of TOS according to the current robots.txt.

Mountain project spatial data sucks

The user interface for submitting GPS coordinates on Mountain Project is bad. When I submit a new route I cannot enter a GPS coordinate by hand; instead I have to drag a map to the GPS location. On top of that, I realized recently that people have more difficulty with GPS and mapping than I expected. So even if the site didn't suck, there is no guarantee that user-submitted information is precise. For this object detection model, precise means 'the point is exactly on top of the boulder'.

A lot of my time was spent building the training data set given the lack of high quality GPS data. I rely on user-submitted GPS data since hand labeling data myself was substantially more difficult than I thought. There are areas I have been to multiple times that I misidentified at home on Google Maps compared to when I got a GPS coordinate in the field. So to some degree it is difficult to be precise.

I am of the opinion that OnX purchased MP in order to eliminate a potential competitor and has no intention of ever improving the site substantially, so I don't anticipate the GPS data for newly submitted routes improving much. In my opinion, reinstating the API would provide more opportunities for projects like this to come to fruition.
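To make "the point is exactly on top of the boulder" concrete, here is a minimal sketch of how a single GPS point could become a box label on an image tile. It is not the code from the repo. It assumes a standard Web Mercator image centered on a known coordinate, and the image size, zoom level, fixed box size, and function names are all hypothetical choices for illustration.

```python
import math

TILE_SIZE = 256  # pixels per tile edge in the standard Web Mercator tiling scheme


def latlon_to_global_pixel(lat_deg, lon_deg, zoom):
    """Project a WGS84 coordinate to global Web Mercator pixel coordinates."""
    scale = TILE_SIZE * (2 ** zoom)
    x = (lon_deg + 180.0) / 360.0 * scale
    lat_rad = math.radians(lat_deg)
    y = (1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * scale
    return x, y


def point_to_box_label(lat_deg, lon_deg, center_lat, center_lon, zoom,
                       image_px=512, box_px=40):
    """Turn one GPS point into a normalized (cx, cy, w, h) box label.

    Assumes a square image of image_px pixels centered on
    (center_lat, center_lon). box_px is a hypothetical fixed box size,
    since a GPS point carries no information about boulder size.
    Returns None when the point falls outside the image.
    """
    px, py = latlon_to_global_pixel(lat_deg, lon_deg, zoom)
    cx, cy = latlon_to_global_pixel(center_lat, center_lon, zoom)
    # Offset of the point from the image's top-left corner, in pixels.
    u = px - cx + image_px / 2.0
    v = py - cy + image_px / 2.0
    if not (0 <= u < image_px and 0 <= v < image_px):
        return None
    return (u / image_px, v / image_px, box_px / image_px, box_px / image_px)
```

Because the box is centered on the submitted point, any GPS error moves the whole box, which is why an imprecise coordinate can leave the label sitting on dirt next to the boulder.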
Reproducibility

It is important to note that reproducing the model, in terms of generating a .pt file to run on your own, is difficult because generating the training data is slow and computationally intensive. On top of that, you need a good GPU to train the ML model. I can provide the scraped information in JSON format, and I can provide my trained .pt models, but the full training data set with .png images is way too large to send or for me to want to host (50 GB or so). If there is huge demand for the full set of training data I can figure out a solution, but I prefer not to unless there is demand.

I would have loved to build a web application to let people play with the model, and I can do that, but unfortunately there is no cheap way to do it, mainly due to the computational cost of running an ML model. I use Linode for deploying stuff and it seemed like this would cost $50/month or more. So it's a bit of a blue-ball here since people can't use FART. If you want to use FART you can email me and I can train the model on a specified area, assuming there are good GPS coordinates, and predict anywhere you want in that area.

Model summary

I am new to computer vision modeling, but in the real world I would provide metrics like a true positive rate or some sort of validation metric to speak to the model's strength. I didn't do that for this project and am instead relying on looking at predictions manually. FART works well in areas where I had a lot of training data and where that data was accurate to begin with. This is mainly Unaweep Canyon. The people who submit GPS coordinates and boulders for Unaweep are Gods among humans, as their data is insanely accurate. I trained the model on 0 data points from the East Coast; the main problem there would be selecting satellite imagery from the winter months, when forest cover is not an issue. Overall, the model isn't that great, as you will see with some examples below.
It is mainly a training data set problem; I really don't think a different model would substantially change predictions here.

There were differences in model performance based on the satellite imagery source. I compared models trained on imagery from the MapBox API and the Google Earth API. MapBox was much, much better, which is an observation that others in the ML satellite imagery space have noted as well (there is a citation for this somewhere but I can't recall where I read it). I was hoping the USGS EarthExplorer would provide a nice API to use, but AFAIK it would involve a lot of manual downloading. The MapBox and Google Earth APIs are just so much friendlier to use in an automated fashion.

FART in action

Here is an example from Unaweep. See how great it looks? The model successfully identified a bathroom as a boulder but missed the Big Bend bouldering area outside of Moab. Some more examples are on the GitHub page. Don't know why the images are so fuzzy on MP.

Anyways, there is always more to iterate on with some bullshit ML model, but for now I likely won't spend more time on this. I do use it occasionally on my bouldering explorations but it's not as precise as I would like. Happy to answer any questions. |
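For anyone wondering what the missing validation metric might look like, here is a minimal sketch of box-level precision and recall using intersection over union (IoU). It is not part of FART. It assumes both predictions and labels are available as (x_min, y_min, x_max, y_max) pixel boxes, and the 0.5 IoU threshold is just a common convention, not something tuned for this project.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, labeled, iou_threshold=0.5):
    """Greedily match each predicted box to at most one labeled box."""
    unmatched = list(labeled)
    true_pos = 0
    for pred in predicted:
        # Pick the still-unmatched label that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            true_pos += 1
            unmatched.remove(best)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(labeled) if labeled else 0.0
    return precision, recall
```

With noisy GPS-derived labels, a low score could reflect bad labels as much as a bad model, so numbers like these would still need to be read alongside the manual checks described above.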

|

|

John Lombardi wrote: I prefer wandering around and stumbling onto stuff, less screen time. |

|

|

Tradiban wrote: Good for you Tradi. No one cares. You should probably stick to the threads where you can complain about how nasty and worthless everyone is on the forums instead of being the first one to reply to everybody with useless condescending posts. OP: This thing is pretty neat! Good luck on the guidebook, it would be awesome if this helps. Looking forward to diving into the github page when I have some free time. |

|

|

Tradiban wrote: Cool. Thanks for your valuable input. OP: I’m also newish to vision modeling but have experience in other data science and remote sensing work, and I’ll check your work out when I have some time! With regard to the GPS locations, you really need to average your location for at least two minutes to get a good estimate. Even then you’re still likely only within 30 feet, assuming a decent handheld GPS unit. Have you done any testing or seen any results comparing small rocky protrusions vs. taller objects? |
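As a rough illustration of the averaging idea, here is a minimal sketch that averages a list of recorded fixes and reports how far the fixes stray from that average. The function names are hypothetical, and the plain arithmetic mean is an assumption that only holds for fixes clustered in a small area away from the poles and the antimeridian.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def average_fixes(fixes):
    """Average (lat, lon) fixes.

    Returns the mean position and the largest distance in meters
    from that mean to any single fix.
    """
    if not fixes:
        raise ValueError("need at least one fix")
    mean_lat = sum(lat for lat, _ in fixes) / len(fixes)
    mean_lon = sum(lon for _, lon in fixes) / len(fixes)
    spread = max(haversine_m(mean_lat, mean_lon, lat, lon) for lat, lon in fixes)
    return (mean_lat, mean_lon), spread
```

A large spread is a hint that the averaged point should not be trusted as sitting on top of the boulder.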

|

|

John Lombardi wrote: Awesome work! Do you know if the bathroom was identified as V1 or V2? |

|

|

Tradiban wrote: Yeah, many of us do wonder about you.... Quoted you, because I can, in spite of your ignore user. If someone quotes me, and you then see it, bump the some climbers and their kids thread, eh? That was quite nice! OP, most of what you've done is entirely out of my wheelhouse, generally, but your effort and what you are/were shooting for is quite interesting! FWIW, my son has a longstanding interest in the wandering around approach, but couples that with many hours scrutinizing satellite imagery. That started 10-15 years ago when he was the sound guy at a mega church. Once it got to the multiple iterations of the actual service, he had lots of time he was paid to just be there. He found a whole lot of interstate stuff. These days, he looks for wild hot springs for his hikes in. There's way more of that here than one can find listed anyplace. Those start with greenery that wouldn't be snowmelt. Have fun! Best, Helen |

|

|

Cool experiment. On a few occasions I have experimented with FART as well. All too often my fart turned into a shart. |

|

|

John Tex wrote: No need to be negative, I'm just saying it's too good, it takes away the "adventure". |

|

|

Ben M wrote: I assume that for most GPS locations people record them in the field and then correct them afterward using Google Maps or whatever, assuming they check at all. At least that is what I do. But I think you are right that the vast majority of points aren't that accurate. For Vedauwoo, as an example, there are weirdly 50 or so routes/boulders that share some default GPS location that is within the Vedauwoo boundary but in the middle of nowhere. So there are some other oddities with respect to QA/QC'ing the data.

To answer your question on leaf-off imagery the way I answer most ML questions: with enough training data it should work. A minor difficulty is ensuring you only predict on leaf-off imagery and only train on leaf-off imagery. That isn't too difficult with some of the satellite imagery APIs. The bigger hurdle is the effect of shadows, and I don't know whether shadows hurt FART or help. I looked into commercial satellite imagery companies that sell guaranteed no-shadow imagery (I forget the term, but the satellite is exactly overhead of the area it captured). Unfortunately the commercial imagery is expensive: even one county's worth of data runs in the thousands of dollars.

Similar to how transfer learning is used for a lot of image classification problems, I would use a transfer learning approach for any sort of new training data. That at least worked well when I tested it: I took the trained model and trained it again on data from Red Feather Lakes (not on MP, but some boulders I put up, cleaned, and then hand labeled), and it performed better afterward. I noticed the model performed worse in areas that just happened to have worse shadows, and I am assuming FART is looking for (a) shadows in some capacity and (b) transitions in color.
The shadows seem to matter, since cars and (in my post above) the bathroom occasionally get classified as boulders.

@amarius The bathroom is definitely V1.

I use this tool as somewhat of an afterthought when I am looking for new places to go. Wandering around is more productive, but it's not that efficient: I have missed great boulders by 100 feet before. I use 2-3 satellite imagery sources to get a good feel for an area, then add in a quick prediction or two with the tool. The Larimer County property assessor has 1cm satellite imagery, which must be commercial, and that is way more helpful than any sort of ML model. On top of that, their ArcGIS mapper has a slider for different years, as well as up-to-date property information. So for the niche activity that is exploring for boulders, having free, high quality satellite imagery matters more. |
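The Vedauwoo oddity, where dozens of routes share one default coordinate, is the kind of thing a simple QA/QC pass can flag before training. Here is a minimal sketch. The record layout (dicts with `lat` and `lon` keys), the rounding precision, and the count threshold are all assumptions for illustration, not the schema of the scraped JSON.

```python
from collections import Counter


def _key(record, decimals):
    """Rounded (lat, lon) pair used to group records at the same spot."""
    return (round(record["lat"], decimals), round(record["lon"], decimals))


def flag_shared_coordinates(records, min_count=10, decimals=5):
    """Return rounded (lat, lon) pairs shared by at least min_count records."""
    counts = Counter(_key(r, decimals) for r in records)
    return {coord for coord, n in counts.items() if n >= min_count}


def drop_flagged(records, flagged, decimals=5):
    """Keep only records whose rounded coordinate was not flagged."""
    return [r for r in records if _key(r, decimals) not in flagged]
```

The threshold is a judgment call: a single large boulder with many problems can legitimately share one coordinate, so flagged locations are worth eyeballing before dropping them.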

|

|

I think this is cool John. I also think google will just have hi-res 3d imagery everywhere before this model becomes too useful, but I could be wrong! |

|

|

You are obviously a brilliant person! As such, you rightfully already thought of and disregarded as names for your system:

Seeking High-Interest Testpieces

Boulder Research - Establishing A Satellite Testing Service

Probable Entity - Not In Service Yet |

|

|

Kevin Kent wrote: Kevin - great point. It's interesting that Google and, I think, Microsoft (and probably others) are competing in this space. Microsoft partnered with https://blackshark.ai/ to use this type of 3D imagery rendering for Microsoft Flight Simulator 2020. It looks really good for Big Bend, since it's right off the road (I assume). Or I might be using it wrong, since it looks like shit here (National Forest near me):

The ML to render 2D to 3D is way beyond me! I just want cheap or free high quality satellite imagery! |

|

|

Have you tried making a model using a Lidar bare-earth DEM rather than visible spectrum imagery? It would probably be way easier for ML to recognize boulders based on volume/relief characteristics than sun/shade characteristics, and the ability to 'delete' the tree cover can feel godlike. Data availability will be an issue but nationwide coverage is supposedly in the works. |
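To illustrate why relief could be an easier signal than sun and shade, here is a minimal sketch that flags cells in a bare-earth DEM grid that stick up above their immediate surroundings. It is a toy local-relief filter, not a trained model. The grid is assumed to be a list of rows of elevations in meters, and the neighborhood radius and 2 m threshold are hypothetical values.

```python
def local_relief(dem, radius=1):
    """Elevation of each cell minus the mean of its neighbors.

    dem is a list of equal-length rows of elevations in meters.
    Edge cells use whatever neighbors exist inside the grid.
    """
    rows, cols = len(dem), len(dem[0])
    relief = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbors = [
                dem[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
                if (rr, cc) != (r, c)
            ]
            if neighbors:
                relief[r][c] = dem[r][c] - sum(neighbors) / len(neighbors)
    return relief


def bump_cells(dem, min_relief_m=2.0, radius=1):
    """Return (row, col) cells standing at least min_relief_m above neighbors."""
    relief = local_relief(dem, radius)
    return [
        (r, c)
        for r, row in enumerate(relief)
        for c, value in enumerate(row)
        if value >= min_relief_m
    ]
```

On real terrain a filter like this would also fire on ridges and cliff edges, so it is better thought of as a candidate generator, or as an extra input channel for a model, than as a boulder detector on its own.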

|

|

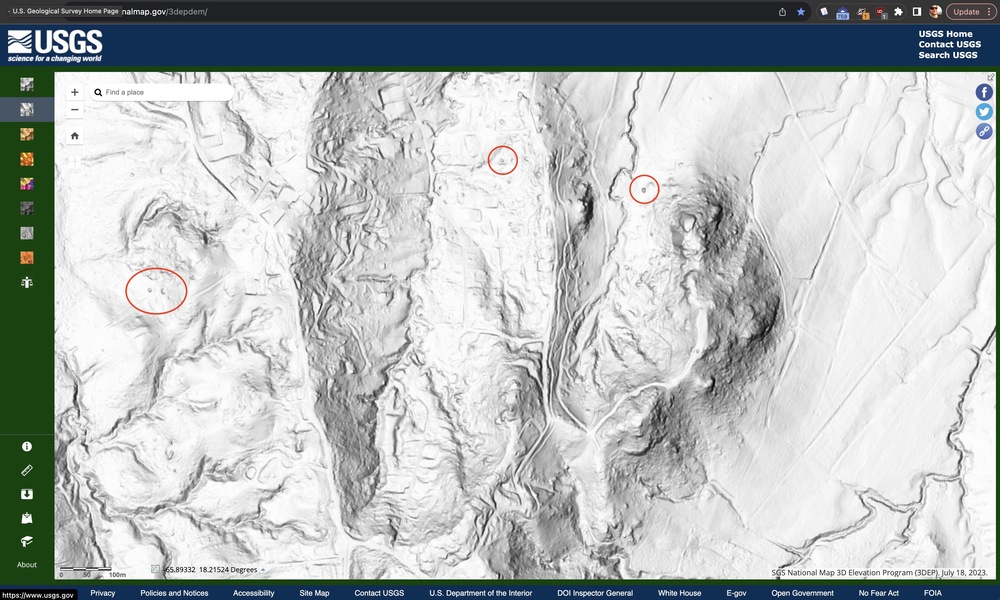

To provide some LIDAR tools for those who don't know the trove of data the US government offers: detailed shaded maps of terrain based on LIDAR data are available at https://apps.nationalmap.gov/3depdem/ Here's an example of what the map looks like when you zoom in on an area that has data. You can see boulders as little bumps scattered around the landscape. You can also see the roads, house plots, and trails. LIDAR coverage is shown on this map: |

|

|

Tradiban wrote: Awwwww, Sportiban has feelings?! How could yall be mean to such a positive guy that always has so, so much to add to everything? |

|

|

Really cool use of tech! |

|

|

Heliodor Jalba wrote: Thanks for the link. I luckily still have a .edu email address so I can still download the 1m hillshade from USGS. I looked manually at a couple of areas and it does look more promising than I thought it would be. I am likely going to either manually download the DEMs or try to download them via the OpenTopo API, and then retrain the model on DEM data. Like you and another poster mentioned, the DEM is probably better for an ML model than just images anyway. |

|

|

To all my loyal fans: FART 2.0 is in the works. I reworked the modeling pipeline to work with DEMs and switched over to some more modern image detection models. If anyone has recommendations for guidebooks that have high quality GPS coordinates for specific boulders, let me know. A couple of more modern guidebooks, and by that I mean more recently released, have GPS coords for each boulder. They aren't always accurate, but guidebook GPS coordinates seem to be more accurate than Mountain Project's. Alternatively, if you've submitted areas to Mountain Project that actually have good GPS coords, let me know. I am not sure if I can easily deploy a model on my current Linode server, but an end goal is to provide people with a way to get model predictions via the web. More to come! |

|

|

Nice work! You can probably build something to extract the coordinates from digital guidebooks (e.g. GunksApp) |
